Key Takeaways

- Photoshop Beta offers Firefly Image 3 AI model for high-quality image generation.

- Users can create images from text prompts and make nuanced stylistic choices.

- New features include utilizing reference images, generating backgrounds, and making selective edits.

Today, at its MAX London event, Adobe announced a new version of Photoshop Beta with a range of significant new features and improvements. Many of these upgrades rely on the Adobe Firefly Image 3 Foundation model, which the company also detailed today. Adobe says that Firefly Image 3 offers “higher-quality image generations, better understanding of prompts, new levels of detail and variety, and significant improvements that enable fast creative expression and ideation.” It also claims that photorealistic quality is improved with better lighting positioning, detail, text display, and more.


I’ve been playing around with Photoshop Beta since the announcement to see just how good these new features are. Adobe announced more than what's covered here, but below are five of the best new features. Remember that these are only available in Photoshop Beta, not regular Photoshop. You can install the Beta version, or update your existing Beta app, through the Adobe Creative Cloud app.

1 Image creation from text prompts

Create wild images from scratch using just text.

Most people know Photoshop’s AI features best for their ability to fill in or replace areas of images automatically, but Photoshop can now create something entirely from scratch. The new Generate Image feature works like any other AI image generator: you enter a text prompt, and it generates an image based on it.

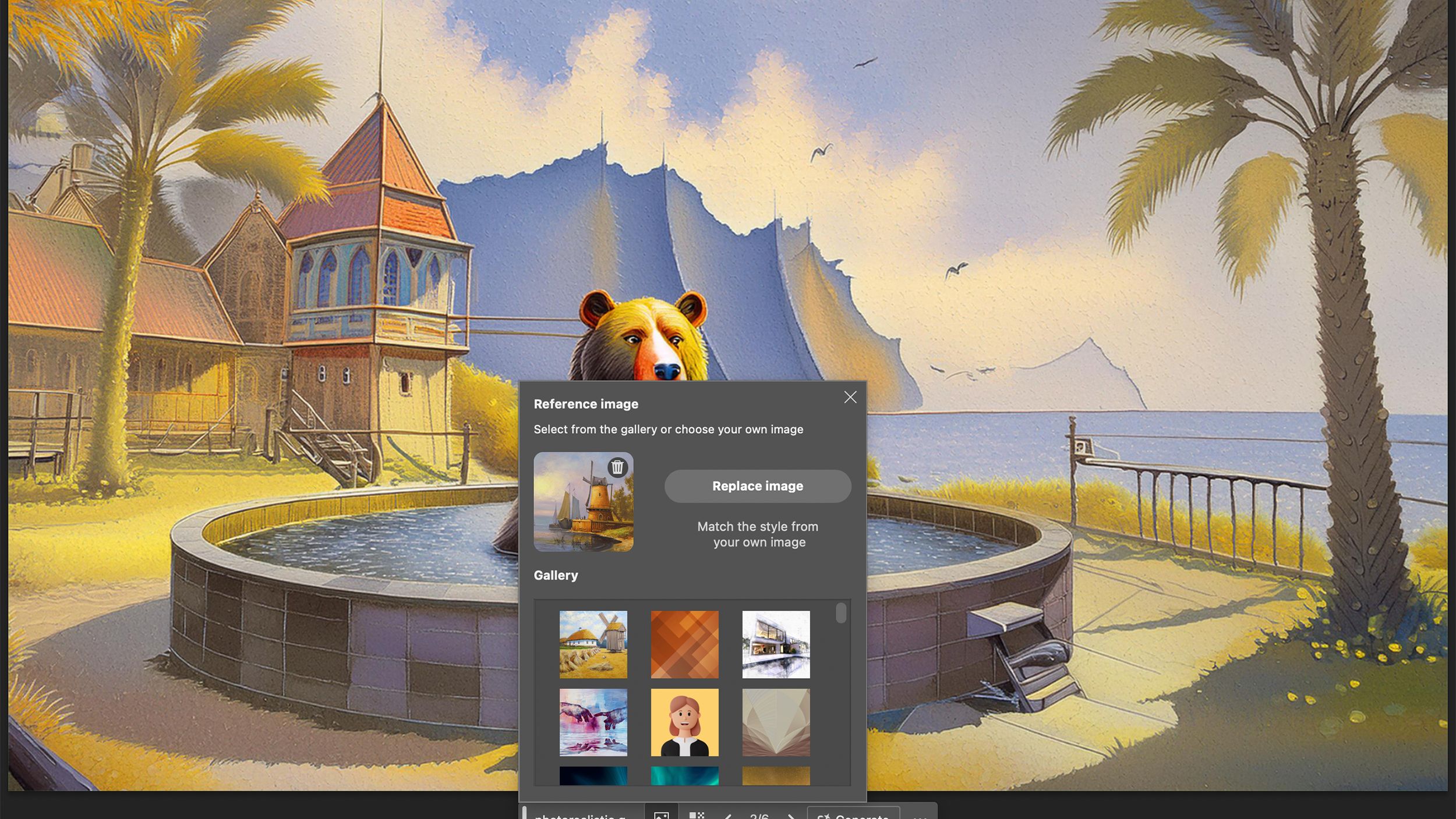

For the images above, I used the prompt “photorealistic grizzly bear lounging in a hot tub outside of a Spanish-style mansion with palm trees and a beach in the background.” I then tested the options that influence style. First, you can choose between Art and Photo. In my admittedly brief testing, I tried both and got plenty of “Photo” results that were less photorealistic than the “Art” ones, so I’m not sure how reliable this setting is for the time being.

You can also choose from quite a few more specific stylistic options, grouped into categories such as art materials (charcoal, claymation, etc.), techniques (double exposure, palette knife, fresco, etc.), effects (bokeh effect, dark, etc.), and more. I didn’t test all of them, but many promise to change the final output significantly, while others are definitely more subtle.

I also tried creating a complete portrait from scratch, using just the prompt “portrait of a woman in a studio setting with simple lighting.” Sure enough, it produced a photo-quality portrait of a woman in a studio. Then, knowing that hands are usually the giveaway for AI, I asked for a full-body portrait in a studio setting. It didn’t quite listen: two of the three results were only from the waist up, and the one full-body result combined four portraits in one image. Overall, it was impressive, but the telltale signs of AI are still there in the hands.

2 Use reference images

Use example images to guide the AI results.

Another new feature tied to Firefly Image 3 is the ability to use reference images. You can either upload your own (Adobe warns that you need the rights to use the image) or pick from suggested Adobe Stock images. The results tend to follow the reference image quite closely, though, giving it more weight than your text prompt. For example, I reused the bear prompt from above. Without any reference image, it did an alright job. But when I selected a randomly suggested stock image of a painted windmill, it completely ignored the “photorealistic” part of my prompt and produced a very painterly image.

3 Replace and generate backgrounds

Turn existing images into something totally new.

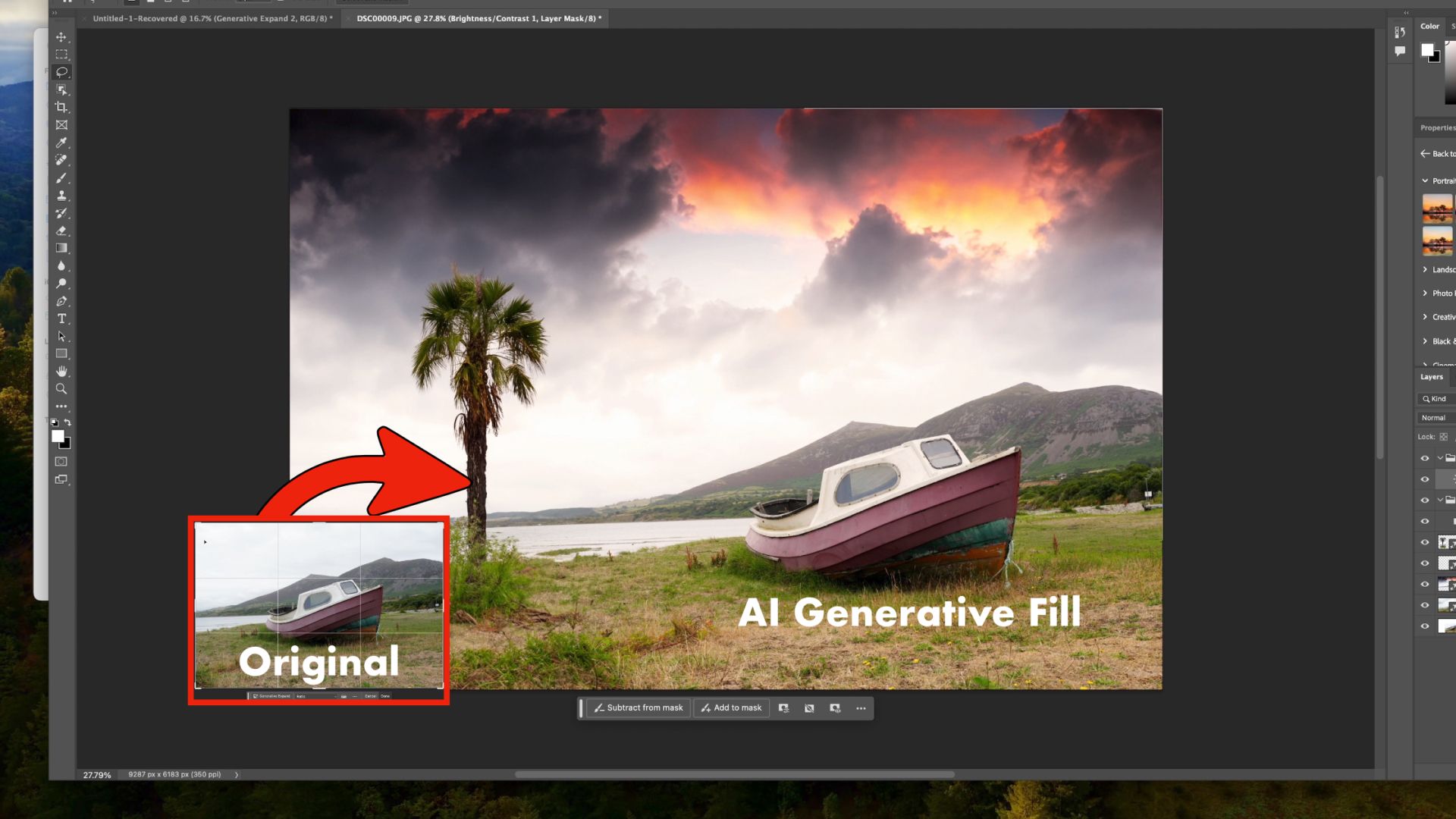

You could already remove backgrounds in Photoshop, but generating new ones automatically was not possible. That is now available in Photoshop Beta. I took this photo of my dog on the beach, removed the background, and then entered the prompt “a living room with a couch” in the Generate Background option. The prompt was pretty vague, so I didn’t expect much, but I was completely blown away by the results.

One of the most useful applications is for product photographers, who can shoot a product once and then swap the background instead of taking multiple images. I tried it on a photo of some watches that I took for a review. It took a few rounds of fine-tuning my prompt, but I eventually got results I was pretty happy with. There are still some issues with the shadows, but if you aren’t looking closely, the results are definitely passable.


4 Generate variations

Adobe’s AI already provides at least a few options for each prompt, but it now adds a “Generate Similar” option. With this feature, you pick the result you like most, and it uses that result to create more options. This lets you home in on a result that matches what you are looking for. It also means you can create similar versions of the same image for use in different types of marketing content, for example. I tried it with the same watch image as above, and it gave me a more polished result that I preferred.

5 Selectively make changes with the Adjustment Brush

Paint on adjustments without permanently changing your image.

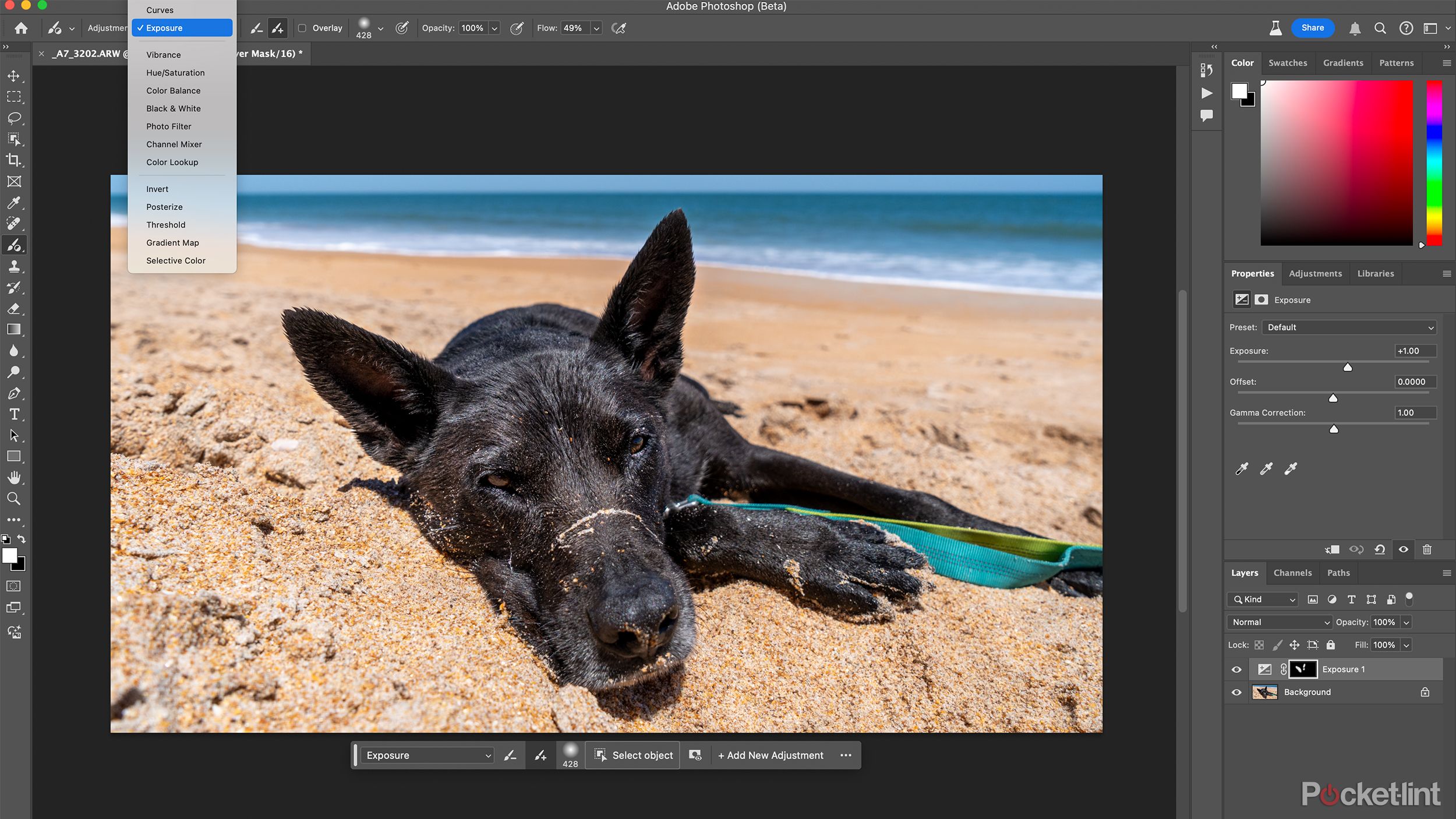

The new release also includes some non-AI features. Lightroom users have long had access to the Adjustment Brush, and now the feature has made its way to Photoshop Beta. The brush lets you paint adjustments onto individual parts of an image. Previously, you would have had to create an adjustment layer that affected the entire image, add a layer mask, and brush out the areas where you didn’t want the adjustment (or some variation of this).

Now, with the brush, you can choose from adjustments such as curves, exposure, levels, brightness and contrast, color balance, and more. You can even paint on photo filters, gradient maps, and selective color. The feature is non-destructive: it automatically creates a new layer with a mask, just as you would the old way, only faster. I don’t use generative AI much in my own work, but I expect to reach for the Adjustment Brush on a regular basis.
